Apache Kafka agent

Target Apache Kafka

Prerequisites

To use Gluesync with your Kafka instance, you need:

- If Kafka is not configured to create topics automatically: one topic for each entity you want to source changes from, created in advance;
- Valid user credentials with permission to write to those topics;
- A valid certificate when SASL authentication is used.
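If automatic topic creation is disabled, the topics can be created up front with the standard `kafka-topics.sh` CLI that ships with Apache Kafka. The sketch below loops over a list of entity names. The entity names, the `create_entity_topics` function, the `KAFKA_TOPICS` override, and the partition and replication counts are illustrative assumptions, not Gluesync requirements. Topic names must match the topics your pipeline actually publishes to.

```bash
#!/usr/bin/env bash
# Sketch: create one topic per source entity on a broker that does not
# auto-create topics. Entity names, partition count and replication factor
# are hypothetical placeholders.

# Kafka CLI to invoke; set KAFKA_TOPICS=echo to print the commands (dry run).
KAFKA_TOPICS="${KAFKA_TOPICS:-kafka-topics.sh}"

# Usage: create_entity_topics <bootstrap-server> <entity>...
create_entity_topics() {
  local bootstrap="$1"
  shift
  local entity
  for entity in "$@"; do
    # --if-not-exists makes the call safe to re-run.
    "$KAFKA_TOPICS" --bootstrap-server "$bootstrap" \
      --create --if-not-exists \
      --topic "$entity" \
      --partitions 1 \
      --replication-factor 1 || return 1
  done
}

# Example (hypothetical entities):
# create_entity_topics kafka-host:9092 customers orders
```

On a secured cluster, `kafka-topics.sh` also needs `--command-config` pointing to a client properties file that holds the admin credentials.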

Setup via Web UI

- Hostname / IP Address: DNS record of your cluster or the IP address of one of its nodes (all other nodes are then discovered automatically).
- Port: (optional, defaults to `9092`) The port the broker listens on.
- Username: Username with a read & write access role on the cluster.
- Password: Password belonging to the given username.
- Enable Tls: (optional, defaults to `false`) Enables or disables TLS encryption.
- Tls certificates: (optional) File browser that lets you upload your certificates.
- Certificates password: (required if authentication is in place, defaults to `NULL`) The password protecting your certificate.
- Disable auth: (optional, defaults to `false`) Disables authentication against the Kafka broker.
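Before filling in the form, it can help to confirm that the host and port are reachable from the machine running Gluesync. The sketch below uses bash's built-in `/dev/tcp` redirection and the coreutils `timeout` command, so no Kafka tooling is required. It only tests TCP reachability, not TLS or credentials. The `check_broker` function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: check that a Kafka broker port accepts TCP connections.
# Only verifies reachability; it does not validate TLS or credentials.

# Usage: check_broker <host> [port]   (port defaults to 9092, like the form)
check_broker() {
  local host="$1"
  local port="${2:-9092}"
  # Open a TCP connection through bash's /dev/tcp pseudo-device in a child
  # shell; give up after 5 seconds if the host does not answer.
  timeout 5 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# Example:
# check_broker kafka-host && echo "reachable" || echo "not reachable"
```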

Custom host credentials

These settings can also be provided via the Rest API through the `customHostCredentials` parameter.

- saslMechanism: (required if authentication is in place, defaults to `SCRAM-SHA-256`) Accepts the following values:
  - `GSSAPI`
  - `OAUTHBEARER`
  - `PLAIN`
  - `SCRAM-SHA-256`
  - `SCRAM-SHA-512`
- securityProtocol: (required if authentication is in place, defaults to `SASL_SSL`) Accepts the following values:
  - `PLAINTEXT`
  - `SASL_PLAINTEXT`
  - `SASL_SSL`
  - `SSL`
- certificatesType: (required if authentication is in place, defaults to `PEM`) Accepts the following values:
  - `PEM`
  - `JKS`
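These three parameters resemble the standard Apache Kafka client settings `sasl.mechanism`, `security.protocol` and `ssl.truststore.type`. As a sketch, assuming the agent values correspond one-to-one to those client settings (an assumption, not a description of Gluesync internals), the function below writes an equivalent client properties file so the same credentials can be tested with the Kafka CLI tools. The `write_client_properties` function name and the file paths are hypothetical, and only the SCRAM and PLAIN login modules are covered.

```bash
#!/usr/bin/env bash
# Sketch: print a Kafka client properties file mirroring customHostCredentials
# (defaults: SCRAM-SHA-256 over SASL_SSL with a PEM certificate).
# The mapping to Kafka client settings is an assumption for testing only.

# Usage: write_client_properties <user> <password> <ca-file> [mechanism] [protocol] [cert-type]
write_client_properties() {
  local user="$1" pass="$2" ca_file="$3"
  local mechanism="${4:-SCRAM-SHA-256}"
  local protocol="${5:-SASL_SSL}"
  local cert_type="${6:-PEM}"

  # Reject values outside the lists accepted by the agent.
  case "$mechanism" in
    GSSAPI|OAUTHBEARER|PLAIN|SCRAM-SHA-256|SCRAM-SHA-512) ;;
    *) echo "unsupported saslMechanism: $mechanism" >&2; return 1 ;;
  esac
  case "$protocol" in
    PLAINTEXT|SASL_PLAINTEXT|SASL_SSL|SSL) ;;
    *) echo "unsupported securityProtocol: $protocol" >&2; return 1 ;;
  esac
  case "$cert_type" in
    PEM|JKS) ;;
    *) echo "unsupported certificatesType: $cert_type" >&2; return 1 ;;
  esac

  # Pick the JAAS login module; GSSAPI and OAUTHBEARER are out of scope here.
  local login_module
  case "$mechanism" in
    SCRAM-*) login_module="org.apache.kafka.common.security.scram.ScramLoginModule" ;;
    PLAIN)   login_module="org.apache.kafka.common.security.plain.PlainLoginModule" ;;
    *) echo "this sketch does not build a JAAS config for $mechanism" >&2; return 1 ;;
  esac

  cat <<EOF
security.protocol=${protocol}
sasl.mechanism=${mechanism}
sasl.jaas.config=${login_module} required username="${user}" password="${pass}";
ssl.truststore.type=${cert_type}
ssl.truststore.location=${ca_file}
EOF
}

# Example: write_client_properties gluesync 's3cret' /myPath/cert.pem > client.properties
```

The resulting file can be passed to `kafka-console-producer.sh --bootstrap-server kafka-host:9092 --topic <topic> --producer.config client.properties` to check that the user can publish. A `JKS` truststore would additionally need `ssl.truststore.password`, and passwords containing double quotes would need escaping in the JAAS line.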

Specific configuration

- useTransactions: (optional, defaults to `false`) If set to `true`, enables Kafka transactions; refer to this documentation to learn more about them.
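When `useTransactions` is enabled, downstream consumers that should only see committed changes typically set the standard Kafka consumer property `isolation.level=read_committed` (the default is `read_uncommitted`). The sketch below reads a topic with the Kafka console consumer in that mode. The `consume_committed` function name and the `KAFKA_CONSUMER` override are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: consume a topic written with useTransactions=true, showing only
# messages that belong to committed transactions.

# Kafka CLI to invoke; set KAFKA_CONSUMER=echo to print the command (dry run).
KAFKA_CONSUMER="${KAFKA_CONSUMER:-kafka-console-consumer.sh}"

# Usage: consume_committed <bootstrap-server> <topic> [client-properties-file]
consume_committed() {
  local bootstrap="$1" topic="$2" config="${3:-}"
  local args=(--bootstrap-server "$bootstrap" --topic "$topic" --from-beginning
              --consumer-property isolation.level=read_committed)
  # Pass security settings (SASL/TLS) when a properties file is given.
  if [[ -n "$config" ]]; then
    args+=(--consumer.config "$config")
  fi
  "$KAFKA_CONSUMER" "${args[@]}"
}

# Example: consume_committed kafka-host:9092 customers client.properties
```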

Setup via Rest APIs

The following example calls the CoreHub Rest API via curl to set up the connection for this agent.

Connect the agent

```bash
curl --location --request PUT 'http://core-hub-ip-address:1717/pipelines/{pipelineId}/agents/{agentId}/config/credentials' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: ••••••' \
  --data '{
    "hostCredentials": {
      "connectionName": "myAgentNickName",
      "host": "host-address",
      "port": 9092,
      "username": "",
      "password": "",
      "disableAuth": false,
      "certificatePath": "/myPath/cert.pem"
    },
    "customHostCredentials": {
      "saslMechanism": "PLAIN",
      "securityProtocol": "SASL_SSL"
    }
  }'
```

Setup specific configuration

The following example shows how to apply the agent-specific configuration via the Rest API.

```bash
curl --location --request PUT 'http://core-hub-ip-address:1717/pipelines/{pipelineId}/agents/{agentId}/config/specific' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: ••••••' \
  --data '{
    "configuration": {
      "useTransactions": true
    }
  }'
```

Topics management

Before Gluesync can push a change feed to a Kafka topic, the topic must exist and the Gluesync user must be authorized to write (publish) messages to it.

We also suggest enabling automatic topic creation so that Gluesync can create every topic it needs.
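On clusters secured with ACLs, write access for the Gluesync user can be granted with the standard `kafka-acls.sh` CLI. The sketch below adds a `Write` ACL per topic for a given principal (in Kafka, `Write` also implies `Describe`). The principal name, topic names, admin properties file, `grant_write` function and `KAFKA_ACLS` override are hypothetical. Transactional producers and automatic topic creation may require further permissions (for example on the transactional ID, or the `Create` operation), which this sketch does not cover. Automatic topic creation itself is controlled by the broker setting `auto.create.topics.enable`.

```bash
#!/usr/bin/env bash
# Sketch: allow a Gluesync user to publish to the given topics on an
# ACL-secured cluster. Principal and topic names are hypothetical.

# Kafka CLI to invoke; set KAFKA_ACLS=echo to print the commands (dry run).
KAFKA_ACLS="${KAFKA_ACLS:-kafka-acls.sh}"

# Usage: grant_write <bootstrap-server> <admin-properties> <user> <topic>...
grant_write() {
  local bootstrap="$1" admin_config="$2" user="$3"
  shift 3
  local topic
  for topic in "$@"; do
    # Write lets the principal produce to the topic (it also implies Describe).
    "$KAFKA_ACLS" --bootstrap-server "$bootstrap" \
      --command-config "$admin_config" \
      --add --allow-principal "User:${user}" \
      --operation Write \
      --topic "$topic" || return 1
  done
}

# Example: grant_write kafka-host:9092 admin.properties gluesync customers orders
```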

Messages format & keys

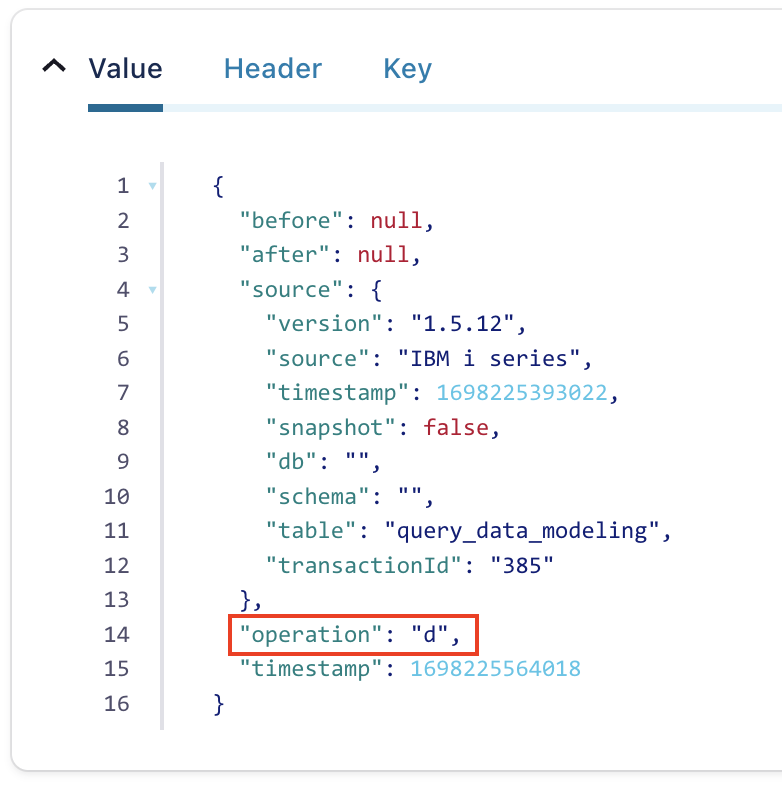

Gluesync encapsulates the message in JSON format into the defined Kafka topics, the message structure looks like the following represented by the screen below.

The message format is similar to that of other industry products such as Debezium. It was chosen to give users switching to Gluesync from other platforms a seamless experience.

| For delete operations, the document body is sent empty while the key field is still populated with the key of the deleted document. This is called a tombstone record. |
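A consumer that mirrors these changes has to treat tombstones as deletions. As a sketch, assuming records are read with the standard console consumer using `--property print.key=true` (which prints the key, a tab separator, then the value, and typically prints a null value as the literal `null`), the filter below labels each line as an upsert or a delete. The `classify_records` function name is hypothetical; because the body is described as empty, both an empty value and `null` are treated as a delete.

```bash
#!/usr/bin/env bash
# Sketch: classify console-consumer output lines of the form
# "<key><TAB><value>" into upserts and deletes (tombstones).

# Usage: kafka-console-consumer.sh ... --property print.key=true | classify_records
classify_records() {
  local key value
  # Split each line on the tab: key before it, the rest of the line as value.
  while IFS=$'\t' read -r key value; do
    if [[ -z "$value" || "$value" == "null" ]]; then
      # Tombstone: key is set but the body is empty, so the document was deleted.
      printf 'DELETE %s\n' "$key"
    else
      printf 'UPSERT %s\n' "$key"
    fi
  done
}
```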