Gluesync - Real-time data replication for RDBMS, NoSQL & data lakes

Core concept behind Gluesync’s architecture

Gluesync, a data replication suite by MOLO17, has been designed from the ground up as a stateless service: a middleware-tier application that connects to source and target database systems without storing information within itself.

Gluesync is written entirely in Kotlin and compiles to Java binaries. It is usually shipped through containers, but it can also run in bare-metal environments, installed as a Java application that runs as a system service on Linux, macOS and Windows.

| Gluesync Enterprise Edition (EE) is deployed on the user’s premises, meaning a user-owned data center or private cloud. It is not available as software-as-a-service or as a managed service. |

Being stateless middleware makes Gluesync easy to deploy and lets it rely on off-the-shelf security measures, which are covered in the following sections.

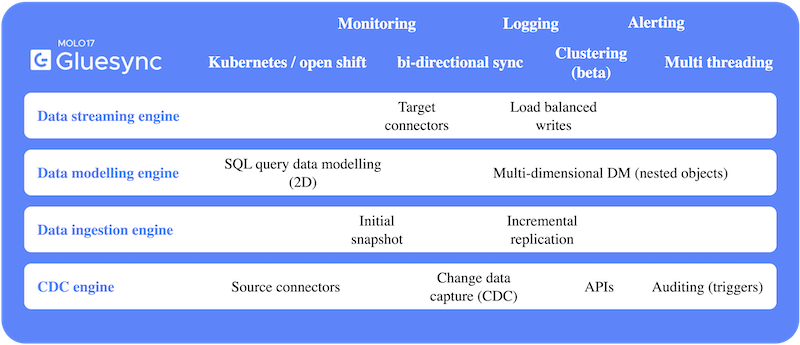

Looking inside the middleware, we can think of Gluesync as a multi-layered application with four main components: source connector, target connector, data ingestion engine and data modeling engine.

The picture shows how these components are arranged and how information flows between the different application layers.

CDC Engine

Responsible for the "first mile" connection between any of the compatible sources and the Data ingestion engine.

It supports encrypted communication between itself and the source database, using TLS certificates and any other secure communication mechanism made available by the source database vendor.

It connects through JDBC drivers to any compatible source database, and through vendor SDKs for data sources that are not JDBC-compatible, such as NoSQL databases and data lake repositories. Security of information at this layer is provided by the JDBC driver or SDK issued by the respective vendor or maintainer. It is important to note that Gluesync does not use any custom driver or SDK: MOLO17 strictly uses only vendor-issued drivers and SDKs.

It implements automatic recovery from connectivity losses with the source, as well as its part of the two-phase commit approach behind Gluesync’s built-in consistency.

The team is available to share the complete list of drivers and SDKs, including the specific version and download link used for each of them.
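The auto-recovery behaviour mentioned above can be pictured as a retry loop around any call that talks to the source. The following is only a minimal sketch, not Gluesync's actual implementation: the function name `call_with_reconnect`, its parameters and the exponential backoff policy are all assumptions made for illustration.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_reconnect(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `operation`, retrying on ConnectionError with exponential backoff.

    Hypothetical helper: the retry policy is an assumption, not Gluesync's.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            # Wait 0.5s, 1s, 2s, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so the policy can be exercised without actually waiting, for example by passing `delays.append` and inspecting the recorded delays.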

Data streaming engine

Similarly to the CDC Engine, this “last mile connector” enables the Gluesync data modeling engine to connect to the destination repository and store data in it.

It implements an algorithm to load balance writes across the nodes of the target cluster, when a cluster is available and supported.

It also implements buffer queues to maximize efficiency when pushing data to the target, automatic recovery from connectivity losses with the target, and its part of the two-phase commit approach behind Gluesync’s built-in consistency.

Every consideration mentioned for the source connector applies here one-to-one.
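To make the combination of buffer queues and write load balancing more concrete, here is a small sketch under stated assumptions: the class `BufferedRoundRobinWriter`, the `send` callback and the round-robin policy are hypothetical and chosen for illustration. The actual balancing algorithm used by Gluesync is not described here.

```python
from itertools import cycle
from typing import Callable, List, Sequence


class BufferedRoundRobinWriter:
    """Buffers records and sends each full batch to the next node in turn.

    Hypothetical sketch: round-robin is an assumed policy, not Gluesync's.
    """

    def __init__(
        self,
        nodes: Sequence[str],
        send: Callable[[str, List[dict]], None],
        batch_size: int = 100,
    ) -> None:
        if not nodes:
            raise ValueError("at least one target node is required")
        self._nodes = cycle(nodes)  # endless node-a, node-b, node-a, ...
        self._send = send
        self._batch_size = batch_size
        self._buffer: List[dict] = []

    def write(self, record: dict) -> None:
        """Queue a record and flush once the batch is full."""
        self._buffer.append(record)
        if len(self._buffer) >= self._batch_size:
            self.flush()

    def flush(self) -> None:
        """Send whatever is buffered to the next node; no-op when empty."""
        if not self._buffer:
            return
        batch, self._buffer = self._buffer, []
        self._send(next(self._nodes), batch)
```

A production writer would also need to keep a batch until the target confirms it, which this sketch leaves out for brevity.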

Data ingestion engine

This core module is responsible for ingesting, in real time, the data feed coming from the source connector. It has a basic understanding of the data structure but does not introspect the data itself, since that is not needed for its tasks.

It spreads the load of the ingested data feeds across multiple threads and ensures transaction consistency across the entire system’s flow.
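One common way to spread a change feed across threads without reordering changes to the same row is to partition events by key. The sketch below illustrates that general technique only. Whether Gluesync partitions by row key is an assumption, and the names `partition_events` and `process_in_parallel` are hypothetical.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, Tuple

Event = Tuple[str, int]  # (row key, sequence number), an assumed shape


def partition_events(events: Sequence[Event], workers: int) -> List[List[Event]]:
    """Assign every event to a bucket chosen by a stable hash of its key."""
    buckets: List[List[Event]] = [[] for _ in range(workers)]
    for key, seq in events:
        index = zlib.crc32(key.encode("utf-8")) % workers
        buckets[index].append((key, seq))  # feed order is kept per bucket
    return buckets


def _drain(bucket: List[Event], handle: Callable[[Event], None]) -> None:
    for event in bucket:
        handle(event)


def process_in_parallel(
    events: Sequence[Event], workers: int, handle: Callable[[Event], None]
) -> None:
    """Process buckets concurrently; each bucket is drained by one task."""
    buckets = partition_events(events, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(_drain, bucket, handle) for bucket in buckets]
        for future in futures:
            future.result()  # re-raise any worker exception
```

Because all events for a given key land in the same bucket, they are handled in their original order even though different keys run in parallel.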

It keeps track of each successfully confirmed transaction, making sure the “Gluesync state preservation table” is kept in good shape.

Based on the status of the Gluesync state preservation table, it also decides whether a full snapshot of the data has to be taken or whether it is time to start capturing real-time data changes.
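The decision between a full snapshot and real-time change capture can be sketched as a small function over the saved state. The layout of the state preservation table is not documented here, so the `ReplicationState` fields, the `Mode` enum and the `confirm` helper below are purely hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Mode(Enum):
    SNAPSHOT = "snapshot"
    CHANGE_CAPTURE = "change_capture"


@dataclass
class ReplicationState:
    """Assumed shape of one row of the state preservation table."""
    snapshot_completed: bool = False
    last_confirmed_position: Optional[int] = None


def next_mode(state: Optional[ReplicationState]) -> Mode:
    """Take a snapshot when there is no state or the snapshot never finished."""
    if state is None or not state.snapshot_completed:
        return Mode.SNAPSHOT
    return Mode.CHANGE_CAPTURE


def confirm(state: ReplicationState, position: int) -> ReplicationState:
    """Record a confirmed transaction; the position only moves forward."""
    last = state.last_confirmed_position
    if last is None or position > last:
        state.last_confirmed_position = position
    return state
```

For example, `next_mode(None)` returns `Mode.SNAPSHOT`, while a state with `snapshot_completed=True` yields `Mode.CHANGE_CAPTURE`.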

Data modeling engine

The data modeling engine is responsible for performing, entirely in RAM, the tasks that model data according to the user’s needs.

It keeps a map of the structure of the data models issued or discovered (if discovery is supported by the source connector) when connecting to the source repository. It maps the incoming change feed, or the incoming bulk data loads from the initial snapshot, to the exact representation that the target data store expects to receive. We can think of it as a real-time ETL job handler/executor.

It does not need to introspect the data itself, since it only cares about the data format (e.g. dates, numbers, strings…) in order to convert it into the desired destination format. Encrypted data remains encrypted and binary data remains binary, since no introspection is implemented or bundled within Gluesync.
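As an illustration of format-only conversion, the sketch below maps a relational row to a document by looking at value types alone. The function names, the `field_map` parameter and the specific conversions (ISO 8601 strings for dates, strings for decimals) are assumptions for this example and not Gluesync's actual mapping rules.

```python
from datetime import date, datetime
from decimal import Decimal
from typing import Any, Dict


def to_document_value(value: Any) -> Any:
    """Convert a value based on its type only, never on its content."""
    if isinstance(value, (datetime, date)):
        return value.isoformat()  # assumed target format: ISO 8601 text
    if isinstance(value, Decimal):
        return str(value)  # keeps precision; an assumed choice
    # bytes, str, int, float, bool and None pass through untouched,
    # so encrypted or binary payloads are never inspected or altered.
    return value


def row_to_document(row: Dict[str, Any], field_map: Dict[str, str]) -> Dict[str, Any]:
    """Rename columns via `field_map` (unmapped names are kept) and convert values."""
    return {
        field_map.get(column, column): to_document_value(value)
        for column, value in row.items()
    }
```

Calling `row_to_document({"ID": 7, "PAYLOAD": b"\x00\x01"}, {"ID": "id"})` renames `ID` to `id` and leaves the binary payload byte-for-byte identical.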

Born for the container era

One of the main considerations when designing the Gluesync architecture was its native ability to be scaled up and deployed with ease, just as you are used to doing with your container-based applications: pull-config-deploy-enjoy. That’s the motto. You gain full control of what happens under the hood, without being confined to GUIs that would not let you harness the full potential of this data replication suite.

Gluesync is shipped through containers. If you haven’t already read about containers, you can have a look at the official Docker homepage for a reference about a common container environment.

| This doesn’t mean that you can’t run Gluesync if you can’t run a containerized environment in your infrastructure. On the contrary: you can ask our team to provide you with the package for your specific destination platform and run it on-prem on bare-metal servers. |

The following diagram represents an architectural overview of a Gluesync environment.

| You can read more about Gluesync’s native approach by looking at our blog article The Gluesync Journey. |

Design

The design takes into consideration the purpose of each core functionality provided by the suite: replicating data from a relational database to a non-relational database, and vice versa. These two functionalities are called "ways" or "directions".

So, rather than having a single monolithic piece of general-purpose software, we have decoupled its functionality into a self-consistent, highly resilient and specialized service for each "direction". Each service is capable of replicating, logging, alerting and being monitored by itself, without the need for a central master authority that would only have increased complexity and introduced a single point of failure into the overall architecture.

That being said, the outcome for our users is the ability to decide what to deploy for each use case: you can deploy only the module that replicates data from MS SQL Server to MongoDB, or only the reverse one, depending on your specific use case. In that way, you have the fine-grained control over permissions, security and performance that you deserve from a product made for real-world production use cases.

Understanding CDC vs GDC

When sourcing changes from a relational database, there are just a few ways to audit the writes, updates and deletions performed at field and row level. The most common approach, but also the most challenging, is reading from the database transaction logs. Luckily, nowadays these are wrapped by an API layer called CDC (Change Data Capture), which gives application developers and DBAs a way to read through them and understand the entire history of the changes made within a certain time frame.

We used the term "challenging" because every database vendor exposes these logs in its own way, so building a tool compatible with all of them is a challenge in itself. In addition, some vendors or database versions (especially older ones) provide neither CDC nor low-level APIs to read the transaction logs.

To provide wider compatibility when capturing real-time change streams from the vast majority of relational databases in the field, we decided to develop a fine-tuned set of UDFs (user-defined functions) that, together with a set of triggers, helps Gluesync enlarge its compatibility base with relational databases while maintaining a safe, fast and secure approach whose entire lifecycle is managed by the engine itself. We called this feature GDC (Gluesync Data Capture). It is used for database brands, versions or editions that do not currently (or at all) support the native CDC technique, or for those that we decided to make compatible through this feature first and to provide with out-of-the-box CDC in the future.
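The general idea of trigger-based change capture can be shown with a small, self-contained example. It uses SQLite from the Python standard library and plain triggers only. The table names, trigger names and the `change_log` layout are hypothetical, and this is not the set of UDFs and triggers that GDC actually installs.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);

    -- Hypothetical change table: one row per captured change.
    CREATE TABLE change_log (
        seq        INTEGER PRIMARY KEY AUTOINCREMENT,
        table_name TEXT NOT NULL,
        operation  TEXT NOT NULL,
        row_id     INTEGER NOT NULL
    );

    CREATE TRIGGER customers_ai AFTER INSERT ON customers BEGIN
        INSERT INTO change_log (table_name, operation, row_id)
        VALUES ('customers', 'INSERT', NEW.id);
    END;

    CREATE TRIGGER customers_au AFTER UPDATE ON customers BEGIN
        INSERT INTO change_log (table_name, operation, row_id)
        VALUES ('customers', 'UPDATE', NEW.id);
    END;

    CREATE TRIGGER customers_ad AFTER DELETE ON customers BEGIN
        INSERT INTO change_log (table_name, operation, row_id)
        VALUES ('customers', 'DELETE', OLD.id);
    END;
    """
)

# Ordinary application writes; the triggers record them as a side effect.
conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Ada')")
conn.execute("UPDATE customers SET name = 'Ada L.' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")


def read_changes(connection: sqlite3.Connection, after_seq: int = 0) -> list:
    """Return captured changes newer than `after_seq`, oldest first."""
    cursor = connection.execute(
        "SELECT seq, operation, row_id FROM change_log WHERE seq > ? ORDER BY seq",
        (after_seq,),
    )
    return cursor.fetchall()


changes = read_changes(conn)
```

A reader that remembers the last `seq` it processed can call `read_changes(conn, last_seq)` to resume from that point, which is the kind of bookkeeping a replication engine needs on top of the capture itself.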

Naming conventions | Product modules

To name each type of Gluesync direction, we adopted the following nomenclature for each replication component:

- from relational (RDBMS) to non-relational databases (NoSQL) has been labeled SQL to NoSQL
- from non-relational (NoSQL) to relational databases (RDBMS) has been labeled NoSQL to SQL
- from non-relational (NoSQL) to non-relational databases (NoSQL) has been labeled NoSQL to NoSQL

| NoSQL to NoSQL data replication is a newly added feature in this version. |

What you’re going to need

Before proceeding, for each Gluesync instance that you want to deploy, please check that you have the following information:

- RDBMS connection details, like:
  - Username
  - Password
  - Connection string (IP address/port)
  - Table names
- NoSQL database connection details, like:
  - Destination, either bucket or database
  - Connection string (IP address/port)
  - Username
  - Password

If you’re involved in the implementation of Gluesync or just trying it out via our trial program, we highly suggest using a visual query editor tool to set up multiple data source connections and easily import, edit and display data. The tools tested by the Gluesync product and QA teams are:

| As MOLO17 we do not provide any support for these specific tools, nor do we advertise them. You are free to use the toolset you prefer to connect and perform queries against your database(s). |

Ready to get started?

If you’re ready to get started, we recommend checking out the Getting started guide.